More samples means better estimates. Enable IncDet's gradient clipping to stabilise EWC training. We assume that Scilab 6.0.1 has already been launched with IPCV 2.0 loaded, as shown in the following figure. A TensorFlow model ships with IPCV 2.0.


Number of dataset samples used to estimate weight importance for FIM. More samples means better estimates. Threshold controlling when to freeze weights. Enable Fisher information masking to preserve accuracy on previous datasets. Let us look into how to load the TensorFlow model in this tutorial.
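To make the idea of Fisher information masking concrete, here is a minimal sketch in plain Python. It assumes that weight importance is the diagonal Fisher information (the mean squared per-sample gradient) and that a weight is frozen once its importance exceeds the threshold. The function names, the toy gradients, and the direction of the threshold test are illustrative assumptions, not taken from the project's code.

```python
def diagonal_fisher(per_sample_grads):
    """Estimate per-weight importance as the mean squared gradient.

    per_sample_grads: one gradient vector (list of floats) per sampled example.
    Using more samples gives a less noisy estimate.
    """
    n = len(per_sample_grads)
    size = len(per_sample_grads[0])
    return [sum(g[i] ** 2 for g in per_sample_grads) / n for i in range(size)]


def freeze_mask(fisher, threshold):
    """Return 0.0 for weights to freeze (importance above threshold), else 1.0."""
    return [0.0 if f > threshold else 1.0 for f in fisher]


def masked_step(weights, grads, mask, lr):
    """Plain gradient-descent step that leaves frozen weights untouched."""
    return [w - lr * m * g for w, g, m in zip(weights, grads, mask)]


# Hypothetical gradients for 3 samples and 3 weights (made-up numbers).
sample_grads = [[0.9, 0.01, 0.0], [1.1, -0.02, 0.0], [1.0, 0.03, 0.0]]
fisher = diagonal_fisher(sample_grads)
mask = freeze_mask(fisher, threshold=0.1)

# Only the first weight is important enough to be frozen.
new_weights = masked_step([0.5, 0.5, 0.5], [1.0, 1.0, 1.0], mask, lr=0.1)
```

In this sketch a lower threshold freezes more weights, which protects old datasets more strongly but leaves less capacity for new ones.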

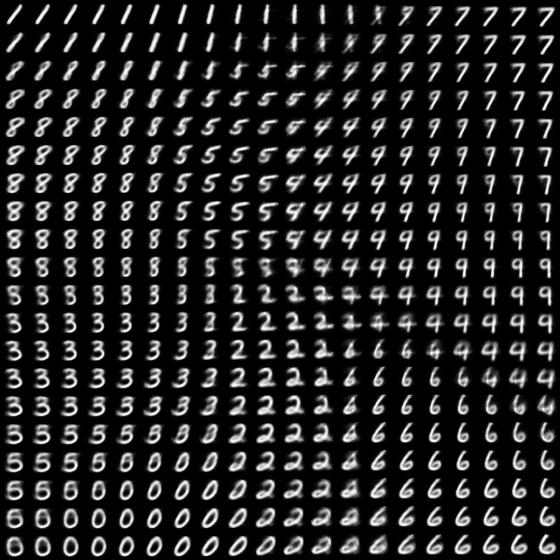

TensorFlow is a lower level mathematical library for building deep neural network architectures. The keras R package makes it easy to use Keras and TensorFlow in R. Use TensorFlow with DC/OS Data Science Engine.

This notebook uses the notMNIST dataset to be used with python experiments. This dataset is designed to look like the classic MNIST dataset, while looking a. tensorflow as tf (xtrain, ytrain), (xtest, ytest) tf.() xtrain.

Permutes the dimensions according to perm. The returned tensor's dimension i will correspond to the input dimension perm[i]. If perm is not given, it is set to (n-1...0), where n is the rank of the input tensor. Hence by default, this operation performs a regular matrix transpose on 2-D input Tensors.

Network alex is fastest, performs the best (as a forward metric), and is the default. For backpropping, net='vgg' loss is closer to the traditional 'perceptual loss'. The lpips option adds a linear calibration on top of intermediate features in the net. Set this to lpips=False to equally weight all the features.

Number of iterations through training dataset. Initial learning rate for Adam optimiser. Network options: "mlp", simple multi-layer perceptron for MNIST; "lenet", simple CNN for MNIST; "cifarnet", simple CNN for CIFAR-10 and CIFAR-100. Dataset to use.

Enable EWC to preserve accuracy on previous datasets. Relative importance of old tasks vs new tasks. Number of dataset samples used to estimate weight importance for EWC.

How to change the dataset during training. Options: "full", use the whole dataset throughout; "permute", apply a permutation to pixels; "increment", start with few classes and add more and more; "switch", start with few classes and switch to different ones. Number of dataset partitions/permutations to create.
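The "permute" option mentioned above can be illustrated with a short sketch: each split gets its own fixed random permutation of pixel positions, and every image in that split is shuffled the same way. The helper names, the seed, and the stand-in image below are hypothetical, and the project may build its permutations differently (for example, by leaving the first split unpermuted).

```python
import random


def make_permutations(num_pixels, splits, seed=0):
    """Create one fixed pixel permutation per split (seeded for repeatability)."""
    rng = random.Random(seed)
    perms = []
    for _ in range(splits):
        perm = list(range(num_pixels))
        rng.shuffle(perm)
        perms.append(perm)
    return perms


def permute_image(flat_image, perm):
    """Reorder a flattened image so output pixel i is input pixel perm[i]."""
    return [flat_image[j] for j in perm]


# A 28x28 MNIST-sized image, flattened; pixel values are stand-ins.
image = list(range(28 * 28))
perms = make_permutations(num_pixels=len(image), splits=3)

# One differently scrambled view of the same image per split.
tasks = [permute_image(image, p) for p in perms]
```

Because each permutation is fixed within a split, the task stays equally learnable for a fully connected network while looking like a new dataset, which is why permuted MNIST is a common benchmark for forgetting.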

Number of inputs to process simultaneously. I haven't seen much benefit from using gradient clipping in the simple examples here. The original paper also included a distillation loss term, which may help, but that is getting very close to iCaRL, which is beyond the scope of this project.

`python3 main.py -epochs=15 -splits=3 -dataset-update=switch -ewc -ewc-lambda=0.01 -incdet -incdet-threshold=1e-6`
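To show roughly what the EWC lambda in the command above controls, here is a sketch of the standard EWC objective: the new-task loss plus a quadratic penalty that pulls each weight back toward its old value, scaled by that weight's estimated importance. The function names and numbers are illustrative assumptions, and the project may scale the penalty differently (for example, without the factor of one half).

```python
def ewc_penalty(weights, old_weights, fisher, ewc_lambda):
    """Quadratic EWC penalty: (lambda / 2) * sum_i F_i * (w_i - w_old_i)^2."""
    return 0.5 * ewc_lambda * sum(
        f * (w - w_old) ** 2
        for w, w_old, f in zip(weights, old_weights, fisher)
    )


def total_loss(new_task_loss, weights, old_weights, fisher, ewc_lambda):
    """Loss on the new task plus the penalty protecting the old task."""
    return new_task_loss + ewc_penalty(weights, old_weights, fisher, ewc_lambda)


# Hypothetical two-weight model: the first weight mattered for the old task,
# the second did not (importance 0), so only the first is penalised.
old_weights = [1.0, -2.0]
fisher = [4.0, 0.0]
weights = [1.5, 3.0]

penalty = ewc_penalty(weights, old_weights, fisher, ewc_lambda=0.01)
loss = total_loss(0.2, weights, old_weights, fisher, ewc_lambda=0.01)
```

A larger lambda weights old tasks more heavily relative to the new one, and setting it to zero recovers ordinary training.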
